AGI Economists, Chinese AI Boyfriends, Backpack Nukes

Machinocene Digest #1

As AI advances, topics that still felt “out there” a few months or years ago are increasingly entering the general discourse. So, I’m experimenting with a shorter digest format. Below are five curated items, with commentary, on themes covered on this blog. Longer thinkpieces will continue as usual.

AGI Economy

1. DeepMind is hiring an AGI economist

At the WEF Annual Meeting, Google DeepMind CEO Demis Hassabis was asked how confident he was that governments grasp the scale of the economic impacts of AGI and are beginning to think about policy responses. He responded that there isn’t anywhere near enough work on this going on and that he’s surprised there aren’t more professional economists at places like this thinking about what might happen. Two days later, Shane Legg announced on Twitter that Google DeepMind is hiring an “AGI Economist”.

Comment: In case a reader wants to throw his or her hat in the ring: the closing date for applications is February 2, 5pm UK time (in 2 hours!).

2. Encode’s Game Plan for AI

Two weeks ago, the US AI think tank Encode published a “Game Plan for AI” addressed to students about to enter the workforce.

It provides students with three archetypes:

The tactician: Ride out a high-paying traditional white collar path while preparing to pivot, using AI to level up your work, and investing in assets.

The anchor: Choose a career path that is fairly AI-proof, protected by licensing, manual dexterity, human personality, and/or face-to-face interaction. This could include electrician, teacher, politician, stand-up comedian, or rabbi.

The shaper: Work on AI governance and/or leverage AI to create start-ups and change workflows in research, investing, media, and education.

Comment: This is pretty good! Last week I participated in Encode’s Next Gen AI Forum on a panel on the Future of Work in an AI Age, and my general advice to young people is to really lean into their advantages. Young people tend to have more free time, more fluid intelligence, and fewer reputational constraints and family obligations than older people in the workforce. Have side projects, work in public, iterate!

Some believe that AI automation will replace the entry level first and then gradually move up the hierarchy until the CEO is running an automated company all on his or her own. This may be directionally true in industries with high barriers to entry, but in most cases it seems like a misleading intuition to me. I would underestimate neither the potential of process innovation enabled by advanced AI nor how structurally ossified many large companies are. So, it’s a good time to build start-ups.

3. Taxes in an AGI Economy

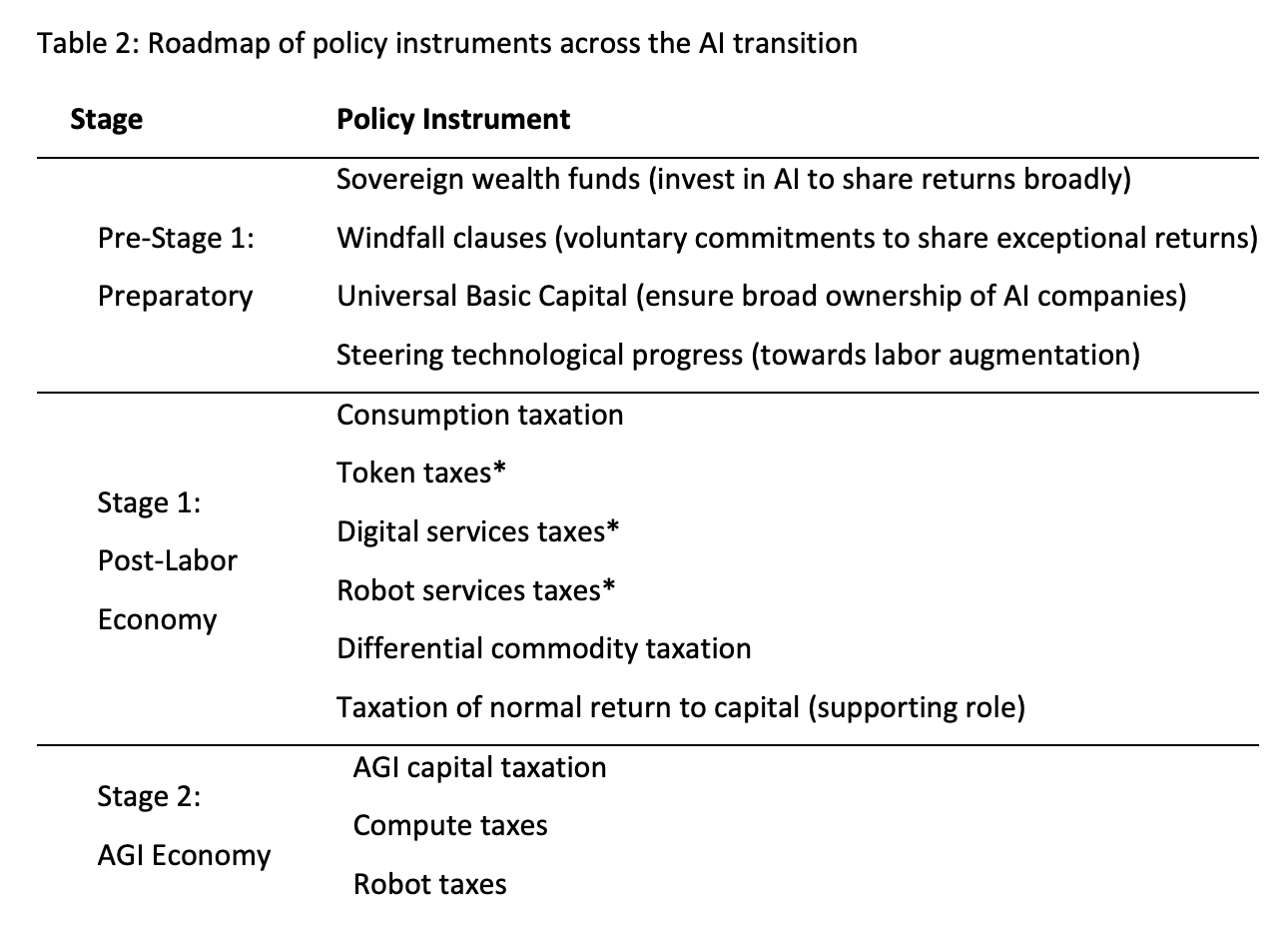

In September 2025, at a workshop on the economics of transformative AI, Anton Korinek and Lee Lockwood presented their ideas for how to ensure tax revenue in a post-labor economy. In January they published a working paper based on this.

Comment: Naturally, I’m excited to see economists working on this. Preparing the tax system has long been a pet topic of mine.

What makes their work interesting is that Korinek & Lockwood think about the right policy at different stages. In the preparatory stage, where we are right now, they recommend increasing exposure to AI-driven assets. In a later stage, governments will likely not be able to finance a full FIRE lifestyle and will still need to find ways to raise taxes in a shifted economy.

From AGI with Love

4. Chinese AI Boyfriends

Zilan Qian’s “Why America Builds AI Girlfriends and China Makes AI Boyfriends” makes for a very interesting read.

Comment: When I’ve written about AI companionship in the past, I’ve mainly explored it through a male lens. The CEO of Replika has long claimed that a significant fraction of its users are female, but it’s interesting to see that this seems to be even more the case in China. I found this somewhat counterintuitive given that China has a male surplus. Probably worth keeping an eye on how the 4B movement treats AI boyfriends.

Geopolitics of AGI

5. One Does Not Simply Dismiss the Nuclear Revolution

This chapter by Stanford Prof. James D. Fearon in a September 2025 RAND Report on AGI and International Security makes a case similar to “The Case Against a Decisive Strategic Advantage”. Selected quotes:

“North Korea’s population is less than half of South Korea’s, 7 percent of the United States’, and 2 percent of China’s. Its economy is minuscule compared with any of these. But its nuclear forces render it quite secure against invasion.”

“AGI capabilities could make state B’s nuclear first-strike option against A more likely to succeed than was previously the case, in the sense of reducing expected nuclear damage from retaliation. Nuclear history suggests that this will encourage state A to take countermeasures (...) the qualitative and quantitative measures that states undertake to restore assured destruction may come along with dangers and heightened risks”

“Faced with greatly improved missile defenses, nuclear weapon states could then have incentives to infiltrate and pre-position small nuclear devices within the AGI-enabled adversary.”

Comment: Thanks to Haydn Belfield for bringing this to my attention. Fearon is otherwise known for his “Rationalist Explanations for War”, which is based on differences in how states perceive the balance of military power. Not to be confused with Bay Area Rationalists.

In some ways, pointing at the limitations of software-only recursion is countercyclical to the moltbook-moment. In other ways, this is very much the right time to highlight them. In the past, the concept of a decisive strategic advantage (DSA) has not had any significant real-world impact because decision-makers expected AI to reach a much lower ceiling on the “technological richter scale”. Going forward, expectations and actions on DSA may increasingly matter, and I am happy to see that a range of writers, from Dean Ball to Dan Wang’s Annual Letter to Arvind Narayanan, have raised similar points.