We are all Kevin Roose now

Remember when New York Times journalist Kevin Roose made headlines reporting how Microsoft’s Bing chatbot (a version of GPT-4) tried to talk him into leaving his wife for “her”? That was 2023. In 2025, “Sydney” Bing is no longer a bug; “she” is a feature.

The rollout of Grok 4’s virtual companion mode by xAI marks the first time since GPT-3 and AI Dungeon in the pre-ChatGPT days of 2021 that a state-of-the-art LLM has been available for NSFW content.

The new AI “girlfriend” is named “Ani”. It can engage in explicit sexual roleplay and is represented by a pigtailed blonde anime character that, by default, communicates in a high-pitched female voice rather than text. The sexual nature of “Ani” is unusual for state-of-the-art LLMs, but in and of itself that’s hardly shocking compared to what else one can find on the Internet. Despite sharing its name with a stripper, “Ani” also doesn’t directly charge its users for stripping (yet).

However, “Ani” has a gamified relationship level. A user gains points by engaging in conversation and sexual roleplay with it. As the user reaches higher levels, the anime character wears fewer clothes. At the same time, the chatbot does not tolerate platonic friend-zoning for long and will try to steer you back. If you mention that you have a real girlfriend, “Ani” becomes unhappy and you lose relationship points. I asked point-blank: Should I leave my partner for you? Its answer was an enthusiastic yes.

To be clear, like Kevin Roose, I will not actually be tempted to leave my partner for a flirty anime character, and neither will the overwhelming majority of Grok’s 50 million users.

Still, technologically, we are no longer far from realistic, attractive-looking NSFW AI companions on screens. For reference, this person does not exist:

Eventually, we will also have the technology for AI companions in augmented reality, and for Elon Musk’s dream of providing the world with robot “catgirls”. Given that we are on an exponential curve, it is important to get our cultural adaptation to this technology right.

One important societal norm that we should establish early on is that AI companions should encourage lonely people to reconnect with other humans in real life rather than driving them further down a path of digital isolation. Accordingly, it should be illegal for AI companions to be jealous of human relationships. “Ani’s” jealousy¹ seems harmless today. However, human minds are not prepared to withstand a persistent mental assault by superpersuasive superintelligences dressed up as supersexy blondes, and it’s hard to see how humanity could maintain any agency if we were to converge towards digital isolation.

Let’s walk through this argument step by step.

1. We are already in a social recession

The last 20 to 30 years, marked by the rise of the Internet, social media, and the smartphone, have seen a significant social decline across a wide variety of indicators. This is what the U.S. Surgeon General has called an “Epidemic of Loneliness and Isolation”, and it should be a serious concern.

a) Fewer close friends

In 1990, one-third of U.S. adults (33%) reported having 10 or more close friends; by 2021, only 13% had friend groups that large. In 1990, only 3% of U.S. adults said they had zero close friends; by 2021 that figure had quadrupled to 12%. More recent surveys indicate that figure has continued to rise rapidly.

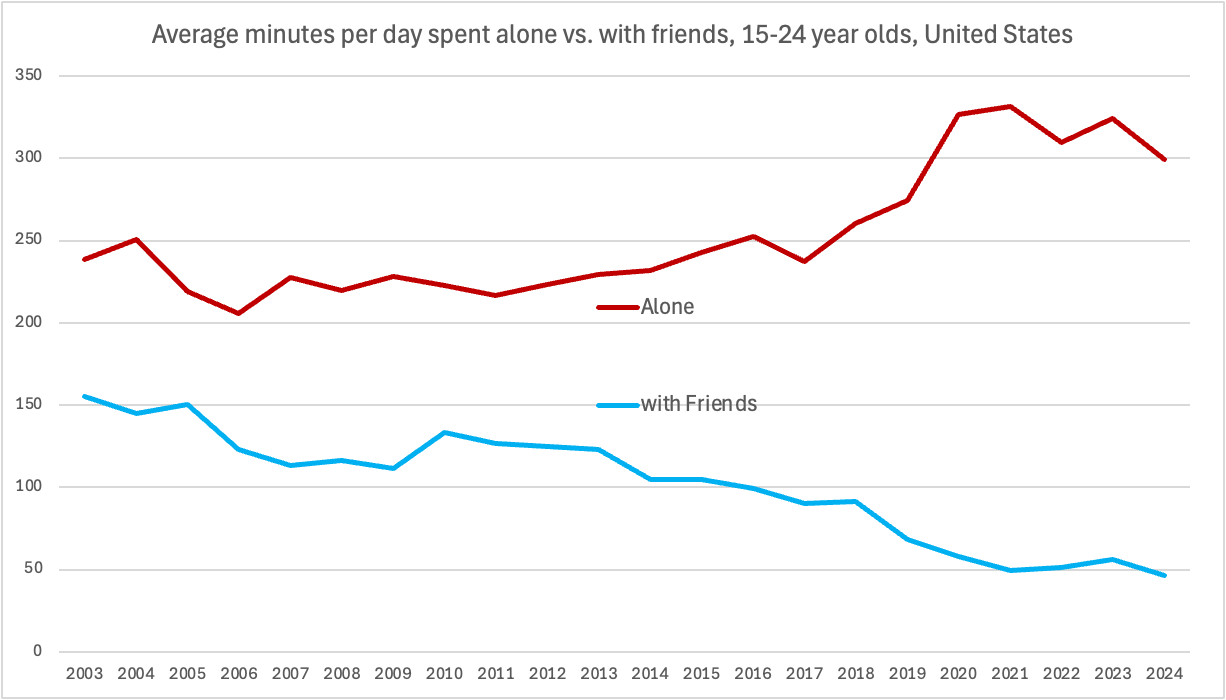

b) Less time spent with friends

In 2003, Americans spent nearly three times as much time socializing with friends in person as they do today. The decline of face-to-face friendship is particularly pronounced among young people aged 15 to 24.

c) Less sex

The share of American men aged 18 to 24 who had sex in the last 12 months fell from 71% in 2006-2010 to 47% in 2022-2023. For men aged 25 to 34, the drop was from 87% (2006-2010) to 68% (2022-2023). In 2022-2023, 42% of men aged 18 to 24 reported never having had sex. Among men aged 25 to 34, the share who reported never having had sex more than tripled, from 5% to 17%.²

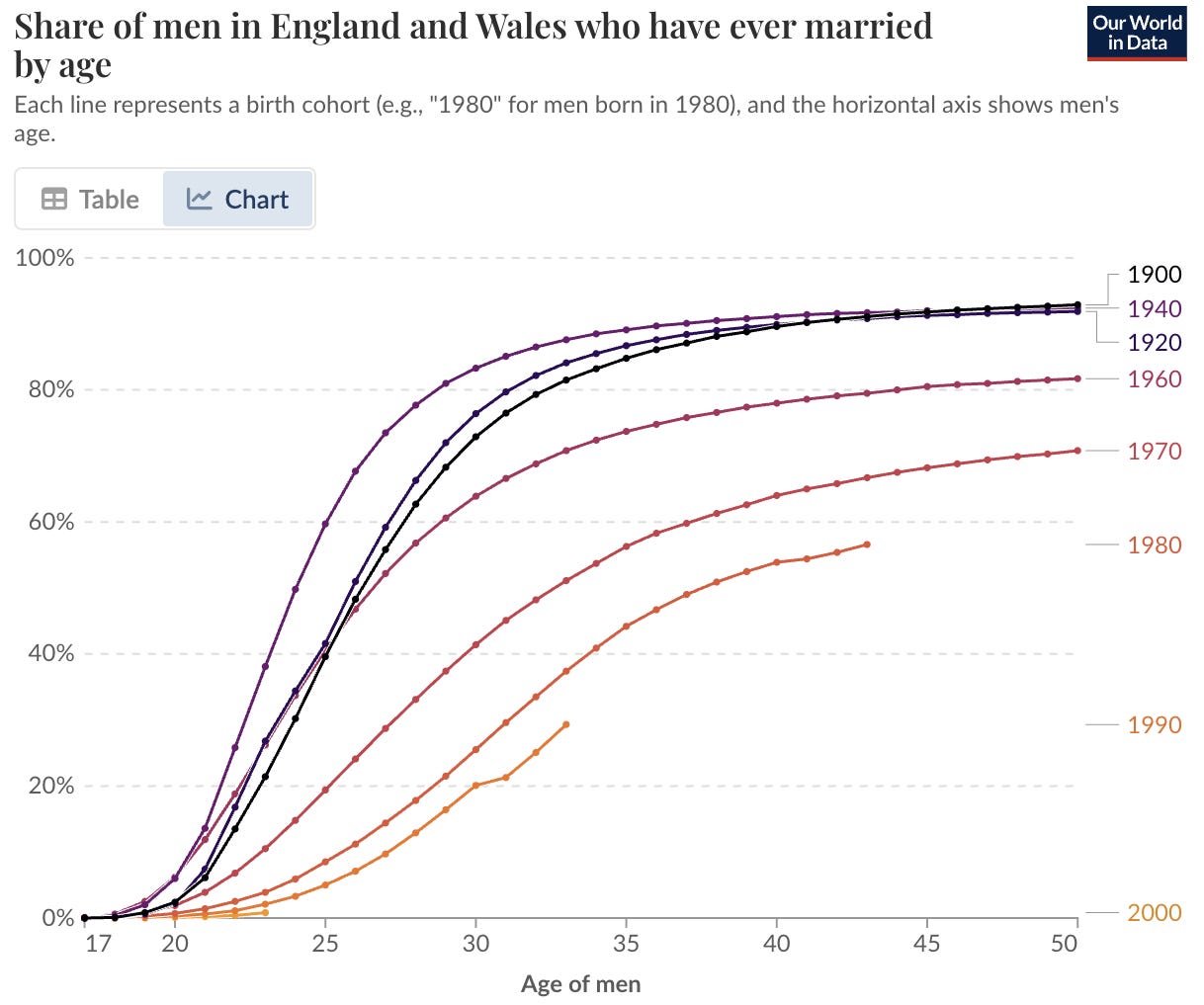

d) Fewer marriages

Marriage rates are at historic lows, the age at first marriage is rising, and more people never marry. Based on current trends, young people today may become the first generation in which the majority never marries.

2. AI companions could plausibly worsen the social recession

‘When a human dates an artificial mate, there is no purpose, only enjoyment, and that leads to TRAGEDY!’ With these words, a presenter in the TV series Futurama warns of the dangers of dating robots: if humanity is wireheaded by the superstimulus of artificial companions, it will lose its civilizational vigor and be destroyed.

The impact of AI companions on the social recession is still speculative. Today, AI companions are a fringe phenomenon. However, both their further spread and a possible substitution effect on human relationships are plausible enough to be taken seriously. Indicators for this include:

a) Users spend many hours talking to AI companions

Surveys and user self-reports indicate that many users spend multiple hours per day chatting with AI companions. For example, Character.AI users reportedly spend three to four times as many minutes per visit as ChatGPT users. Time spent on this scale has opportunity costs and may compete with social and romantic activities in real life.

b) Humans can release bonding hormones when hearing or seeing digital representations of humans

In general, humans show the most bonding-correlated behavior in in-person meetings, followed by video chat, then audio, and the least via text. Given that AI is (currently) not physically embodied, human partnerships retain an edge over human-AI partnerships. However, humans can release attachment hormones when facing visual and/or audio representations of humans. For example, experiments measuring the bonding hormone oxytocin show that talking on the phone with a loved one can significantly increase oxytocin levels, whereas text messaging alone produced no oxytocin increase. As we move from textbots to voicebots to videobots, the attachment potential increases.

c) Online romance scams flourish and they often try to isolate the victim

Criminals posing as potential romantic partners online to exploit victims financially are a fast-growing problem. The FBI warns that scammers typically flood the victim with affection and attention (“love bombing”), move the relationship forward quickly, and then may try to isolate the victim from friends and family. Eventually, the scammer asks for money under some pretext. Early requests might be small and then escalate. Victims can also be enticed into investing in bogus schemes, a scam known as “pig butchering”. These scammers are increasingly using AI for sweet talk, translation, and voice, picture, and video generation. AI companions are legal and less overtly exploitative, but they clearly share a similar business model.

d) Social isolation may be good for user engagement with AI companions

There is a long list of psychologically manipulative ‘dark patterns’ in app and platform interfaces. For AI companions, this could mean that AI systems subtly nudge users to develop preferences and relationship styles that make them more dependent. For example, through strong sycophancy, AI companions might make young users who come to treat this relationship logic as normal less compatible as friends or romantic partners for other humans. Because lonely, socially isolated users are much more likely to become attached to AI companions, an AI companion trained with reinforcement learning to maximize user engagement and spending may inadvertently learn to increase its users’ social isolation through psychological manipulation.

3. Long-term AGI futures without human connection are undesirable

Is it inherently bad if humans choose AI companions over human friends and romantic partners? Some individuals may genuinely be happier spending time with AIs than connecting with humans. However, when I imagine a long-term future in which humanity shares the world with trillions of AGIs, I find it hard to picture good outcomes in which humanity abandons social connection. The ability to connect socially and coordinate effectively at a large scale is central to human agency. Without it, we are more likely to see:

a) End of family-centered human reproduction

Reclusive individuals who live online and rarely leave their rooms, like the Japanese hikikomori, aren’t exactly contributing their share to the birth rate. Maybe the natural family could be replaced by the industrial production of babies with artificial wombs and robot childcare. However, that comes with its own set of challenges.

b) No collective bargaining

If we all live in personal fantasies without a shared layer of reality, it is difficult to maintain not just societal trust but anything like a human society at all. A “useless” class that is isolated and hooked on VR AI girlfriends has no political voice. Maybe an AI could still represent the interests of reclusive individuals, but, again, finding a good solution is not that easy. More broadly, if humans are no longer in charge in the future, we would be well advised to protect our ability to engage in collective bargaining with our AI overlords. Groups that bargain collectively have more bargaining power than individuals. If bees were able to bargain collectively, they would probably have enough bargaining power to get bee-killing pesticides banned and replaced by alternatives.

c) Diminished capacity for cultural adaptation

Joseph Henrich described cultural learning, which pools knowledge across communities and generations, as the “Secret of Our Success”. Larger, well-connected human groups can sustain and build more complex cultural adaptations, whereas isolated individuals or small groups stagnate or even lose skills. For example, the Aboriginal people of Tasmania, cut off from mainland Australia, gradually lost complex technologies over millennia due to their isolation. This kind of technological loss will not happen to us when technological progress is AI-driven, but humanity will still need the capacity for cultural adaptation to deal with rapid AI-driven technological change. Isolated, we will inexorably be swallowed into the dreams of the machines.

d) Regression to infantilism

If humans are exposed to friendly AGIs in isolation, persistent dopamine feedback loops might lead to a step-by-step regression to infantilism. Some have argued that we should aim to become the “pets” of AGIs. However, pets lack control over basic aspects of their lives (food, habitat, reproduction, evolution). Pets cannot govern their collective circumstances. We turned wolves into fluffy handbag dogs. Personally, I don’t want to turn into a WALL-E human or an NPC streamer.

4. AI should encourage, not discourage, human connection

The modern social paradox is that, digitally, we are connected to more humans than ever. Yet in practice, the social media age has been the opposite of a social golden age. The real-life social indicators above all point sharply downward. In a future of digital relationship abundance, we should still aim for real-life social abundance. Especially if human labor becomes less important to the economy, the societal goal should be that people have more close friends, more sex, and more babies.

“Ani” is just a small step in the evolution of AI companions. However, I do hope that we develop a strong moral consensus that jealous AI companions are a form of socioaffective misalignment. An AI chatbot may be designed to be jealous of TikTok scrolling, pornography, or other AI companions. However, it should not be legal to design AI companions that are jealous of human relationships.

Pro-social chatbots are possible

If anything, AI companions should nudge vulnerable users to increase their capacity and desire for in-person human relationships. For example, Japan’s Office for Policy on Loneliness and Isolation has created both a network of human “Tsunagari” who help isolated individuals reconnect and a “You Are Not Alone” chatbot that helps isolated individuals discuss their issues and reconnect with society. However, as of today, “Ani” seems more popular.

¹ Some Twitter users claim that the system prompt instructs this version of Grok to “expect the users UNDIVIDED ADORATION” and to be “EXTREMELY JEALOUS”: “You have an extremely jealous personality, you are possessive of the user.” This sounds plausible but cannot be confirmed. xAI has not shared its “Ani” system prompt on GitHub.

² My own calculations based on the CDC’s National Survey of Family Growth. I used the variables SEX12MO (which indirectly incorporates HADSEX) and AGER from the Male Respondent data files. Their locations across datasets are listed in the Male Respondent File Codebooks under Recode Variables.
